Explore a complete guide to rate limiting system design, from beginner concepts to advanced algorithms and architecture. Learn about the token bucket, sliding window log, distributed rate limiting, performance optimization, and more!

Building a Scalable Rate Limiting System: From Basics to Advanced Design

In today’s high-traffic applications and cloud-scale systems, managing how frequently a user or service accesses resources is critical. This is where rate limiting steps in to keep systems stable. Whether the goal is preventing server overload, ensuring fair usage, or avoiding abuse, rate limiting is foundational to any software architecture.

So, let’s begin with the basics before diving into the advanced aspects, complete with relatable real-world analogies and reference diagrams that help these concepts stick!

High-Level Design of Rate Limiting Systems

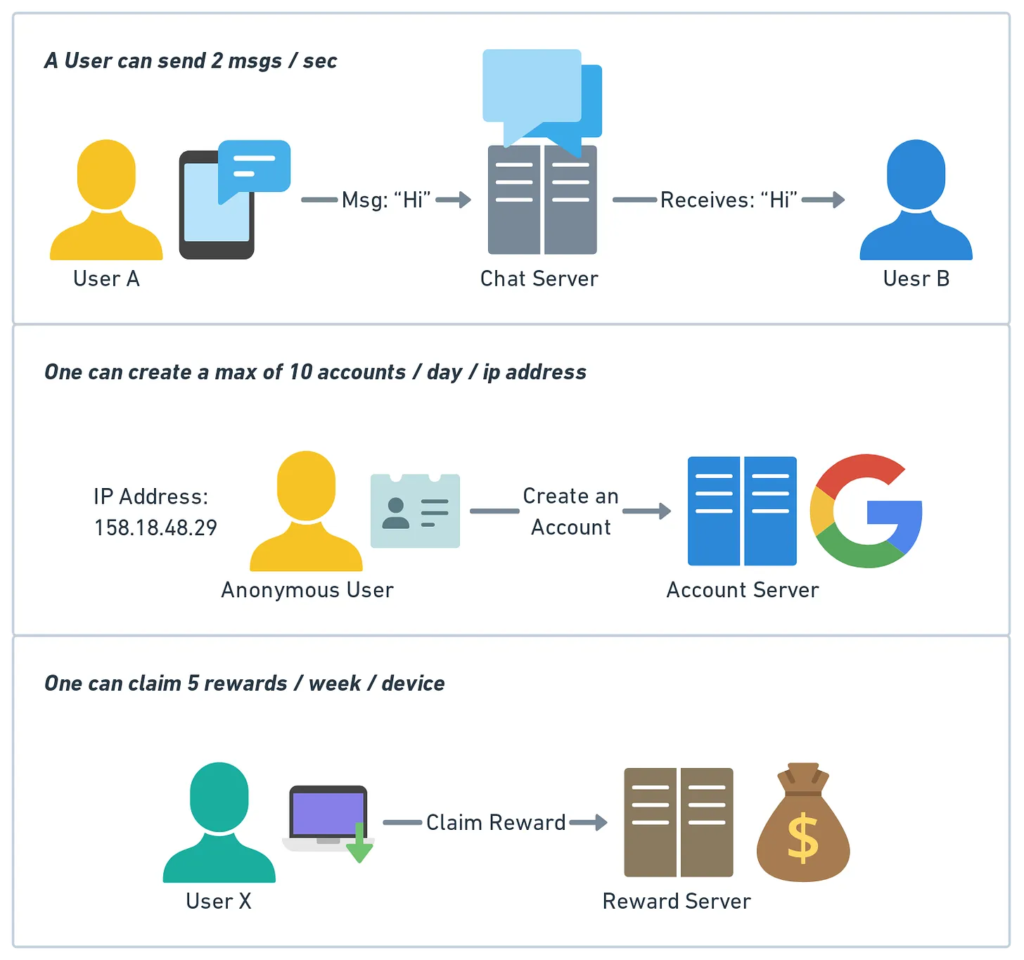

A rate limiter is like a bouncer outside a popular nightclub, controlling entry so the venue never gets overcrowded. When designing a rate limiter, the goal is to limit how many requests a user or service can make within a given timeframe. Limits can be applied at several levels:

- User-level: Controls individual user access.

- Service-level: Controls access across different services.

- IP-level: Regulates requests from specific IPs.

At a high level, a rate limiting system generally includes:

1. Counter (tracking mechanism): Tracks the number of requests.

2. Limit and time configuration: Defines limits such as “5 requests per second.”

3. Overflow action: Specifies what happens when the limit is exceeded, such as throttling or rejecting the request.

Consider a public library that limits visitors to 3 books per visit so that others also get a chance to borrow them. That is rate limiting at work: protecting a shared resource while allowing fair usage.


Rate Limiting Algorithms

Rate limiting relies on algorithms that define how requests are tracked and how limits are enforced. The most common approaches are:

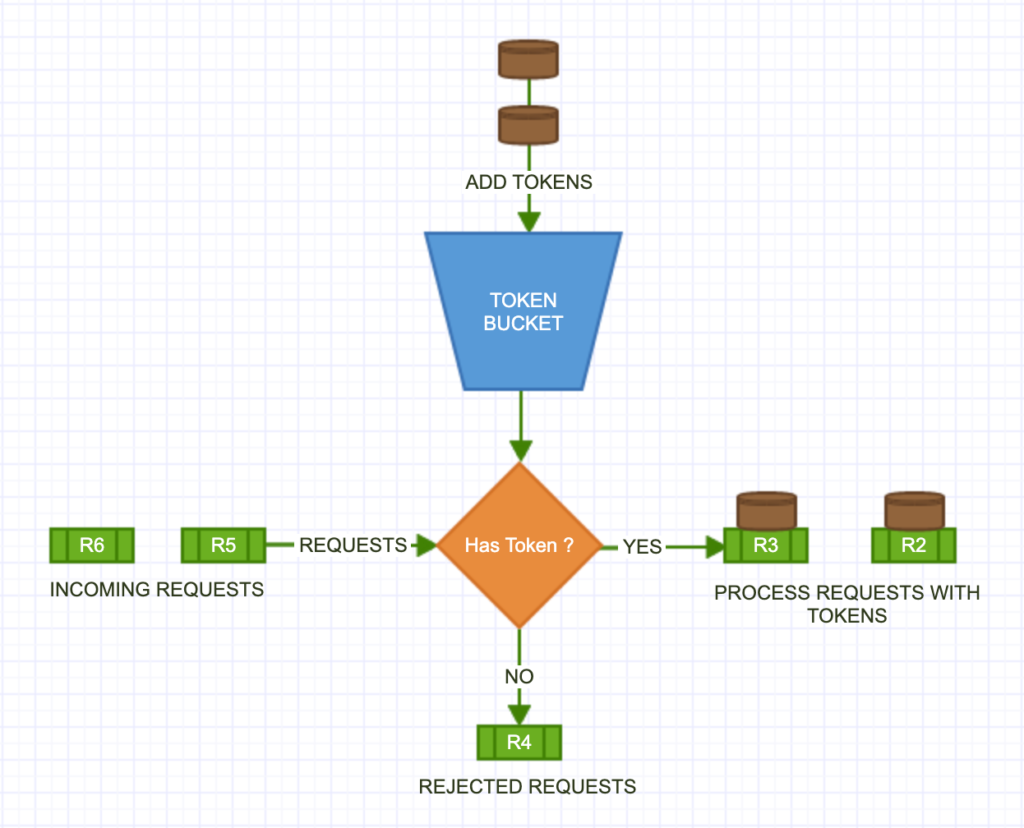

Token Bucket

The token bucket algorithm acts like a tollbooth that lets cars (requests) through as long as the bucket holds tokens, which represent permissions. Each request consumes one token, and tokens are refilled at a fixed rate up to the bucket’s capacity. This enforces an average request rate while still allowing bursts up to that capacity.

Pro: Supports bursts without any compromise on average rate.

Con: Requires proper configuration of token replenishment rates.
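A minimal single-process sketch of the token bucket in Python follows. It assumes tokens refill continuously in proportion to elapsed time (rather than in discrete ticks), and the names `TokenBucket`, `capacity`, and `refill_rate` are illustrative, not taken from any library. The optional `now` argument exists only so the behavior can be checked deterministically.

```python
import time
from typing import Optional


class TokenBucket:
    """Single-process token bucket: refills continuously, allows bursts up to `capacity`."""

    def __init__(self, capacity: int, refill_rate: float) -> None:
        self.capacity = capacity            # maximum burst size, in tokens
        self.refill_rate = refill_rate      # tokens added per second
        self.tokens = float(capacity)       # the bucket starts full
        self.last_refill: Optional[float] = None

    def _refill(self, now: float) -> None:
        # Add tokens for the time elapsed since the last call, capped at capacity.
        if self.last_refill is not None:
            elapsed = max(0.0, now - self.last_refill)
            self.tokens = min(float(self.capacity), self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self, now: Optional[float] = None) -> bool:
        """Consume one token if available; return False when the bucket is empty."""
        now = time.monotonic() if now is None else now
        self._refill(now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

For example, `TokenBucket(capacity=5, refill_rate=1.0)` accepts a burst of five requests at once, then roughly one request per second after that.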

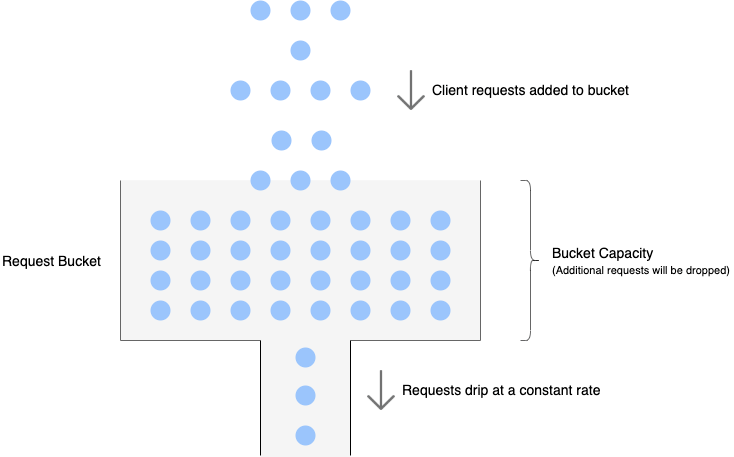

Leaking Bucket

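In the leaking bucket algorithm, incoming requests flow into a bucket (typically a FIFO queue) with a fixed capacity. Requests leak out of the bucket, meaning they are processed, at a constant rate, like water dripping from a faucet. Requests that arrive while the bucket is full overflow and are discarded.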

Pro: It ensures a constant rate of output.

Con: Handles large bursts poorly, since requests that arrive while the bucket is full are discarded.
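The sketch below models the leaking bucket as a bounded FIFO queue that releases requests at a constant rate. It is an illustrative assumption, not a reference implementation: `LeakingBucket`, `offer`, and `drain` are hypothetical names, and a real system would have a worker calling `drain()` on a timer to process the released requests.

```python
import time
from collections import deque
from typing import Any, List, Optional


class LeakingBucket:
    """Bounded FIFO queue whose contents leak out (get processed) at a constant rate."""

    def __init__(self, capacity: int, leak_rate: float) -> None:
        self.capacity = capacity      # max requests waiting in the bucket
        self.leak_rate = leak_rate    # requests released per second
        self.queue: deque = deque()
        self.ready: List[Any] = []    # released requests not yet collected by drain()
        self.last_leak: Optional[float] = None
        self._credit = 0.0            # fractional leak accumulated between calls

    def _leak(self, now: float) -> None:
        # Move as many queued requests to `ready` as the constant rate allows.
        if self.last_leak is not None:
            self._credit += max(0.0, now - self.last_leak) * self.leak_rate
        self.last_leak = now
        while self.queue and self._credit >= 1:
            self.ready.append(self.queue.popleft())
            self._credit -= 1
        if not self.queue:
            self._credit = 0.0        # an empty bucket does not bank leak capacity

    def offer(self, request: Any, now: Optional[float] = None) -> bool:
        """Add a request; returns False (request discarded) if the bucket is full."""
        now = time.monotonic() if now is None else now
        self._leak(now)
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True

    def drain(self, now: Optional[float] = None) -> List[Any]:
        """Return the requests that have leaked out and are ready to process."""
        now = time.monotonic() if now is None else now
        self._leak(now)
        released, self.ready = self.ready, []
        return released
```

With `capacity=3` and `leak_rate=1.0`, a fourth simultaneous request is discarded, and the queued requests come out one per second regardless of how fast they arrived.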

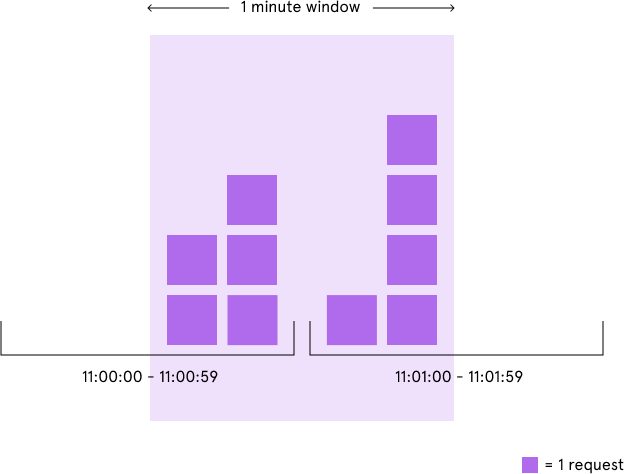

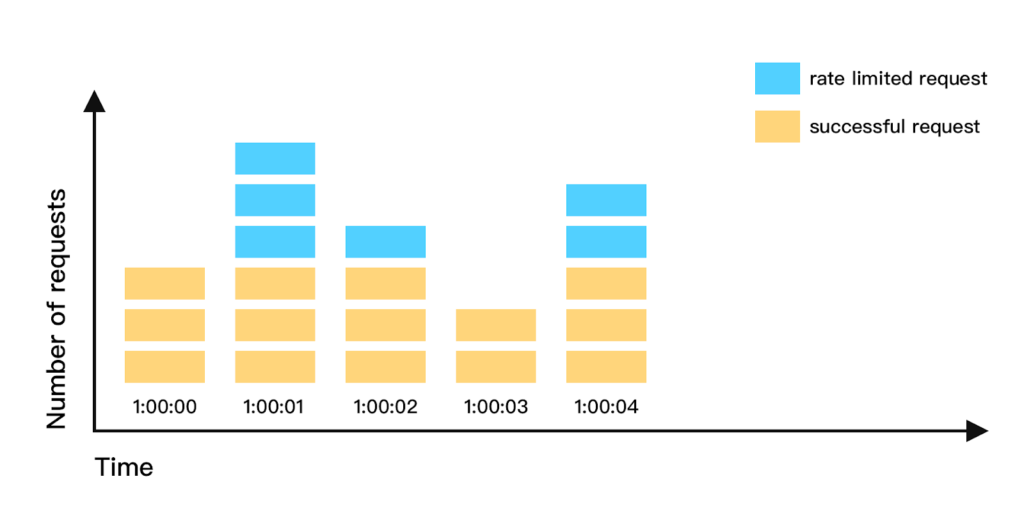

Fixed Window Counter

This approach divides time into fixed windows, like every second, and counts the number of requests in each window. When the count crosses the limit, requests are blocked until the next window.

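Pro: Simple to implement and memory-efficient, which suits low-complexity use cases.

Con: Bursts at window boundaries can let through up to twice the limit: a client can use its full quota at the end of one window and another full quota at the start of the next.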
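Here is a minimal in-memory sketch of a fixed window counter. It assumes windows aligned to multiples of `window_seconds` and a plain dictionary as storage (a shared store would be needed across servers); `FixedWindowCounter` is a hypothetical name.

```python
import time
from typing import Dict, Optional, Tuple


class FixedWindowCounter:
    """Counts requests per key in fixed, aligned time windows."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        # key -> (window index, number of requests counted in that window)
        self.counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        window_id = int(now // self.window)      # which fixed window `now` falls in
        current_id, count = self.counters.get(key, (window_id, 0))
        if current_id != window_id:
            count = 0                            # a new window has started: reset
        if count >= self.limit:
            return False                         # blocked until the next window
        self.counters[key] = (window_id, count + 1)
        return True
```

This also reproduces the boundary problem: with a limit of five per minute, five requests at t = 59 s and five more at t = 60 s all pass, so ten requests get through within about one second.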

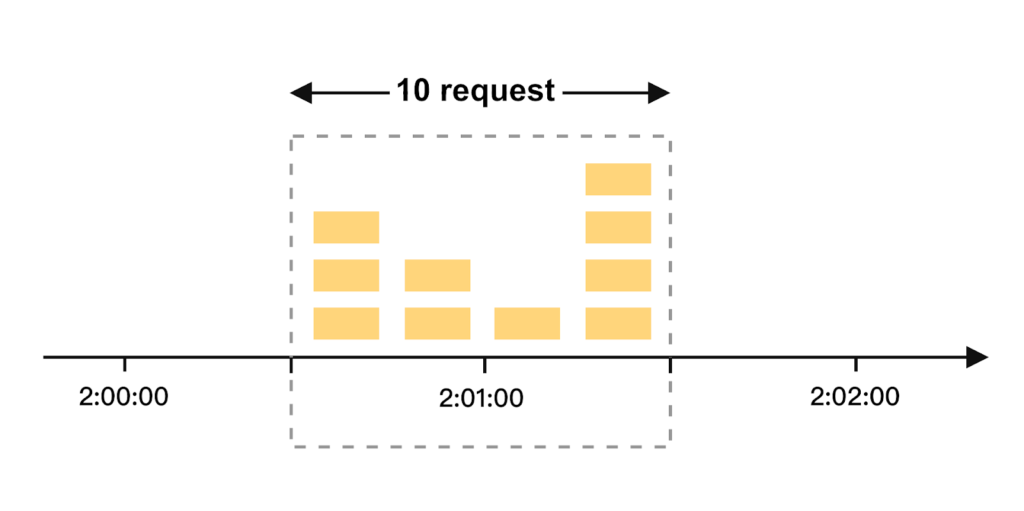

Sliding Window Log

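This algorithm stores a timestamp for every request. When a new request arrives, timestamps that have slid out of the window are discarded, and the request is allowed only if the number of remaining timestamps is below the limit. This gives precise control over rate limits.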

Pro: Enforces the limit accurately over any rolling window, so bursts at window boundaries cannot exceed it.

Con: Memory usage is high, because a timestamp must be stored for every request in the window.
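A minimal sketch of the sliding window log, assuming one in-memory deque of timestamps per key and timestamps that never go backward. This variant logs only accepted requests; some descriptions also log rejected ones, which additionally penalizes clients that keep retrying. `SlidingWindowLog` is a hypothetical name.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SlidingWindowLog:
    """Keeps a timestamp per accepted request and counts those inside the window."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        self.logs: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        log = self.logs[key]
        # Drop timestamps that have slid out of the window (now - window, now].
        while log and log[0] <= now - self.window:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True
```

Unlike the fixed window counter, five requests at t = 59 s followed by one at t = 60 s are rejected, because all five earlier timestamps are still inside the rolling 60-second window.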

Sliding Window Counter

A memory-efficient alternative to the sliding window log, this approach keeps counters for fixed time segments instead of individual timestamps, and estimates the request rate over the rolling window from those counters.

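Pro: Memory-efficient while still smoothing out spikes at window boundaries.

Con: Less accurate than the sliding window log, because it approximates how requests were distributed within earlier segments.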
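One common way to realize this idea keeps just two counters per key, one for the current fixed window and one for the previous window, and weights the previous count by how much of it still overlaps the rolling window: `estimate = current + previous * (1 - elapsed_fraction)`. The sketch below assumes that two-window variant and that requests in the previous window were evenly spread; `SlidingWindowCounter` is a hypothetical name.

```python
import time
from typing import Dict, Optional, Tuple


class SlidingWindowCounter:
    """Estimates a rolling-window count from two fixed-window counters per key."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        # key -> (current window index, current window count, previous window count)
        self.state: Dict[str, Tuple[int, int, int]] = {}

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        window_id = int(now // self.window)
        cur_id, cur, prev = self.state.get(key, (window_id, 0, 0))
        if window_id == cur_id + 1:
            cur_id, cur, prev = window_id, 0, cur   # moved into the next window
        elif window_id != cur_id:
            cur_id, cur, prev = window_id, 0, 0     # idle for two or more windows
        # Portion of the previous window still inside the rolling window.
        overlap = 1 - (now % self.window) / self.window
        estimated = cur + prev * overlap
        allowed = estimated < self.limit
        self.state[key] = (cur_id, cur + 1 if allowed else cur, prev)
        return allowed
```

With a limit of 10 per minute, a client that used all 10 requests in the previous window and is 15 seconds into the next one has an estimated count of 0 + 10 × 0.75 = 7.5, so only three more requests pass.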

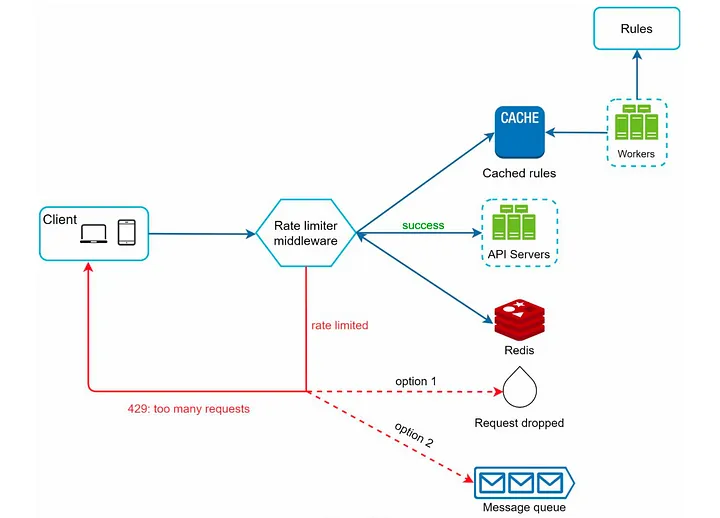

High-Level Architecture

The architecture of a rate limiting system largely determines its performance and scalability. Common components include:

- Request interceptor: Receives incoming requests (for example, in middleware or an API gateway) and forwards them to the rate limiter.

- Rate limiter: Implements one of the algorithms above to decide whether each request is within its limit.

- Data store: Holds counters and timestamps. Redis is a popular choice because its in-memory reads and writes are fast and it supports key expiration.

- Response handler: Allows or blocks each request based on the limit check, and can attach rate limiting headers to the response.
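The components listed above can be wired together roughly as follows. This is a sketch under several assumptions: `InMemoryStore` stands in for Redis (mimicking an increment-with-expiry pattern), the limiter uses a fixed window for brevity, and one wrapper function plays both the interceptor and response handler roles. All names are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]  # (HTTP status, body)


class InMemoryStore:
    """Data store stand-in (hypothetical) for Redis: expiring counters per key.

    Expired entries are reset on access but never evicted; a real store
    would drop them itself.
    """

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[int, float]] = {}  # key -> (count, expires_at)

    def incr(self, key: str, ttl: float, now: float) -> int:
        count, expires_at = self._data.get(key, (0, now + ttl))
        if now >= expires_at:
            count, expires_at = 0, now + ttl  # expired: start a fresh counter
        count += 1
        self._data[key] = (count, expires_at)
        return count


class RateLimiter:
    """Rate limiter component: a fixed-window check backed by the store."""

    def __init__(self, store: InMemoryStore, limit: int, window: float) -> None:
        self.store = store
        self.limit = limit
        self.window = window

    def is_allowed(self, client_id: str, now: float) -> bool:
        window_id = int(now // self.window)
        key = f"rl:{client_id}:{window_id}"
        return self.store.incr(key, self.window, now) <= self.limit


def rate_limited(limiter: RateLimiter,
                 handler: Callable[[str], Response],
                 clock: Callable[[], float] = time.time) -> Callable[[str], Response]:
    """Interceptor plus response handler: check the limit, then forward or reject."""

    def wrapped(client_id: str) -> Response:
        if limiter.is_allowed(client_id, clock()):
            return handler(client_id)        # within limit: forward to the real handler
        return (429, "Too Many Requests")    # over limit: block the request
    return wrapped
```

Keeping the store behind a small interface like `incr` is what lets the same limiter logic move from a single process to a shared store later.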

Deep Design Considerations

Rate Limiting Rules

Configurable rules make a rate limiter flexible. For example:

- User-level vs. IP-level limits.

- Separate limits for read/write operations.
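Rules like these are often kept as configuration that the limiter looks up per request. The sketch below assumes a rule table keyed by scope and operation, keys authenticated users by user ID and anonymous clients by IP, and treats GET, HEAD, and OPTIONS as reads. `Rule`, `RULES`, and `resolve_rule` are hypothetical names, and the limits are made-up example values.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    limit: int            # max requests allowed...
    window_seconds: int   # ...per window of this length


# Hypothetical rule table keyed by (scope, operation); values are examples only.
RULES: Dict[Tuple[str, str], Rule] = {
    ("user", "read"): Rule(limit=100, window_seconds=60),
    ("user", "write"): Rule(limit=20, window_seconds=60),
    ("ip", "read"): Rule(limit=300, window_seconds=60),
    ("ip", "write"): Rule(limit=50, window_seconds=60),
}

READ_METHODS = {"GET", "HEAD", "OPTIONS"}


def resolve_rule(user_id: Optional[str], ip: str, method: str) -> Tuple[str, Rule]:
    """Return the counter key and rule that apply to a request."""
    operation = "read" if method.upper() in READ_METHODS else "write"
    # Authenticated requests are limited per user; anonymous ones per IP.
    scope, ident = ("user", user_id) if user_id else ("ip", ip)
    return f"{scope}:{ident}:{operation}", RULES[(scope, operation)]
```

The returned key can then be passed to any of the limiters sketched earlier, so reads and writes are counted separately.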

Exceeding Rate Limits

When users exceed a limit, they need feedback. The usual response is HTTP 429 (Too Many Requests), along with:

- Rate limiter headers: Return headers such as X-RateLimit-Limit (the quota), X-RateLimit-Remaining (requests left in the current window), and X-RateLimit-Reset (when the quota resets) so clients know their status.
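A sketch of building the status code and headers for a fixed-window limiter follows. The `X-RateLimit-*` headers are a widely used convention rather than a formal standard, so their exact semantics vary between APIs; this sketch assumes `X-RateLimit-Reset` is a Unix timestamp in seconds, and it adds the standard `Retry-After` header on rejection. `rate_limit_response` is a hypothetical helper.

```python
import math
from typing import Dict, Tuple


def rate_limit_response(limit: int, used: int, window_seconds: int,
                        now: float) -> Tuple[int, Dict[str, str]]:
    """Build the status code and headers for a fixed-window limiter.

    `used` is the number of requests counted in the current window,
    including the one being answered.
    """
    window_end = (math.floor(now / window_seconds) + 1) * window_seconds
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, limit - used)),
        # Assumption: reset is a Unix timestamp; some APIs send seconds-until-reset.
        "X-RateLimit-Reset": str(int(window_end)),
    }
    if used > limit:
        # Tell the client how many whole seconds to wait before retrying.
        headers["Retry-After"] = str(math.ceil(window_end - now))
        return 429, headers
    return 200, headers
```

For instance, at `now=1000.0` with a 60-second window, the current window ends at 1020, so a rejected client is told to retry after 20 seconds.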

Rate Limiting in a Distributed Environment

In a distributed setup, multiple servers handle requests, which makes it hard to keep counters synchronized. Techniques such as consistent hashing, combined with distributed data stores like Redis Cluster or DynamoDB, ensure that every request for a given key maps to the same counter.
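To illustrate the consistent hashing part, the sketch below routes each rate limit key to one of several counter nodes on a hash ring, so every application server sends a given key’s updates to the same node. Node names such as `redis-a` are hypothetical, the ring uses virtual nodes (an assumption) to spread keys more evenly, and it only shows routing, not the counters themselves.

```python
import bisect
import hashlib
from typing import List, Tuple


class HashRing:
    """Consistent-hash ring that maps a rate limit key to one counter node."""

    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        self._ring: List[Tuple[int, str]] = []
        for node in nodes:
            for i in range(vnodes):   # virtual nodes spread each node around the ring
                self._ring.append((self._hash(f"{node}#{i}"), node))
        self._ring.sort()
        self._hashes = [h for h, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        # First 8 bytes of SHA-256 as an integer position on the ring.
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def node_for(self, key: str) -> str:
        # First ring position clockwise from the key's hash, wrapping around.
        idx = bisect.bisect(self._hashes, self._hash(key)) % len(self._hashes)
        return self._ring[idx][1]
```

The payoff of consistent hashing is that removing or adding a node only moves the keys that belonged to that node; every other key keeps its counter on the same node.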

Challenges include:

- Data replication: Keeping counters consistent across multiple servers.

- Network latency: Affects synchronization speed and accuracy.

Performance Optimization

Rate limiting, if not tuned properly, can itself become the bottleneck. Common optimizations include:

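- Caching counters: Keep frequently accessed counters in local memory to avoid a round trip to the data store on every request.

- Asynchronous processing: Queue some requests and process them later instead of handling every one immediately, which smooths out load.

- Clustered data stores: Run a store such as Redis in cluster mode to spread load across nodes and keep data access fast.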
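The caching idea can be sketched as a local counter that batches increments and syncs with the shared store at a fixed interval. This is an assumption-laden illustration: `SharedStore` and `CachedCounter` are hypothetical names, windowing and expiry are omitted, and because each server checks a possibly stale global count, the system can admit more than the limit between syncs. That accuracy trade-off is the price of fewer round trips.

```python
import time
from typing import Dict, Optional


class SharedStore:
    """Stand-in for a shared counter store (e.g. Redis): key -> global count."""

    def __init__(self) -> None:
        self.counts: Dict[str, int] = {}

    def add(self, key: str, delta: int) -> int:
        self.counts[key] = self.counts.get(key, 0) + delta
        return self.counts[key]


class CachedCounter:
    """Checks limits against a locally cached count and flushes increments in batches."""

    def __init__(self, store: SharedStore, limit: int, sync_interval: float) -> None:
        self.store = store
        self.limit = limit
        self.sync_interval = sync_interval
        self.known_global: Dict[str, int] = {}   # last global count seen per key
        self.pending: Dict[str, int] = {}        # local increments not yet flushed
        self.last_sync: Optional[float] = None

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last_sync is None or now - self.last_sync >= self.sync_interval:
            self.flush(now)
        estimate = self.known_global.get(key, 0) + self.pending.get(key, 0)
        if estimate >= self.limit:
            return False
        self.pending[key] = self.pending.get(key, 0) + 1
        return True

    def flush(self, now: float) -> None:
        """One store round trip per key per interval instead of one per request."""
        for key in set(self.known_global) | set(self.pending):
            self.known_global[key] = self.store.add(key, self.pending.get(key, 0))
        self.pending.clear()
        self.last_sync = now
```

With two servers, a limit of 10, and a one-second sync interval, each server can admit six requests before either has synced, so twelve pass in total; after the next sync both see the true global count and start rejecting.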

Monitoring and Analytics

Monitoring is essential in rate-limiting system design. Set up metrics to track:

- Request rates per endpoint.

- Blocked requests to analyze user behavior.

- Latency of rate limiter checks.

Tools such as Prometheus, Grafana, or Datadog provide observability and can alert on anomalies or surges in request rates to keep operations smooth.
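A minimal in-process sketch of the three metrics listed above follows: per-endpoint request counts, blocked counts, and the latency of each limiter check. In practice these would be exported to a monitoring system rather than kept in Python lists; `LimiterMetrics`, `observe`, and `block_ratio` are hypothetical names.

```python
import time
from collections import Counter
from typing import Callable, Dict, List


class LimiterMetrics:
    """Records per-endpoint request and blocked counts plus limiter check latency."""

    def __init__(self) -> None:
        self.requests: Counter = Counter()   # endpoint -> total limiter checks
        self.blocked: Counter = Counter()    # endpoint -> rejected checks
        self.latencies: Dict[str, List[float]] = {}  # endpoint -> check durations (s)

    def observe(self, endpoint: str, check: Callable[[], bool]) -> bool:
        """Run a limiter check, record its outcome and duration, and return the outcome."""
        start = time.perf_counter()
        allowed = check()
        elapsed = time.perf_counter() - start
        self.requests[endpoint] += 1
        if not allowed:
            self.blocked[endpoint] += 1
        self.latencies.setdefault(endpoint, []).append(elapsed)
        return allowed

    def block_ratio(self, endpoint: str) -> float:
        """Share of checks that were blocked; a sudden jump is worth alerting on."""
        total = self.requests[endpoint]
        return self.blocked[endpoint] / total if total else 0.0
```

Wrapping the check rather than the whole request keeps the latency metric focused on the rate limiter itself.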

Putting it All Together

An effective rate limiting system combines algorithmic design with architectural best practices and performance tuning. Every step, from choosing the right algorithm to handling distributed setups and monitoring, contributes to a robust and scalable rate limiter.

A rate limiter is like a rush-hour policy at a coffee shop: everybody gets served, just not all at once, so everybody leaves caffeinated, not cranky! By balancing control with flexibility, your rate limiter can keep access smooth even under heavy load.