Deep immersion techniques for cleaning, modeling, and visualizing data—without the burnout

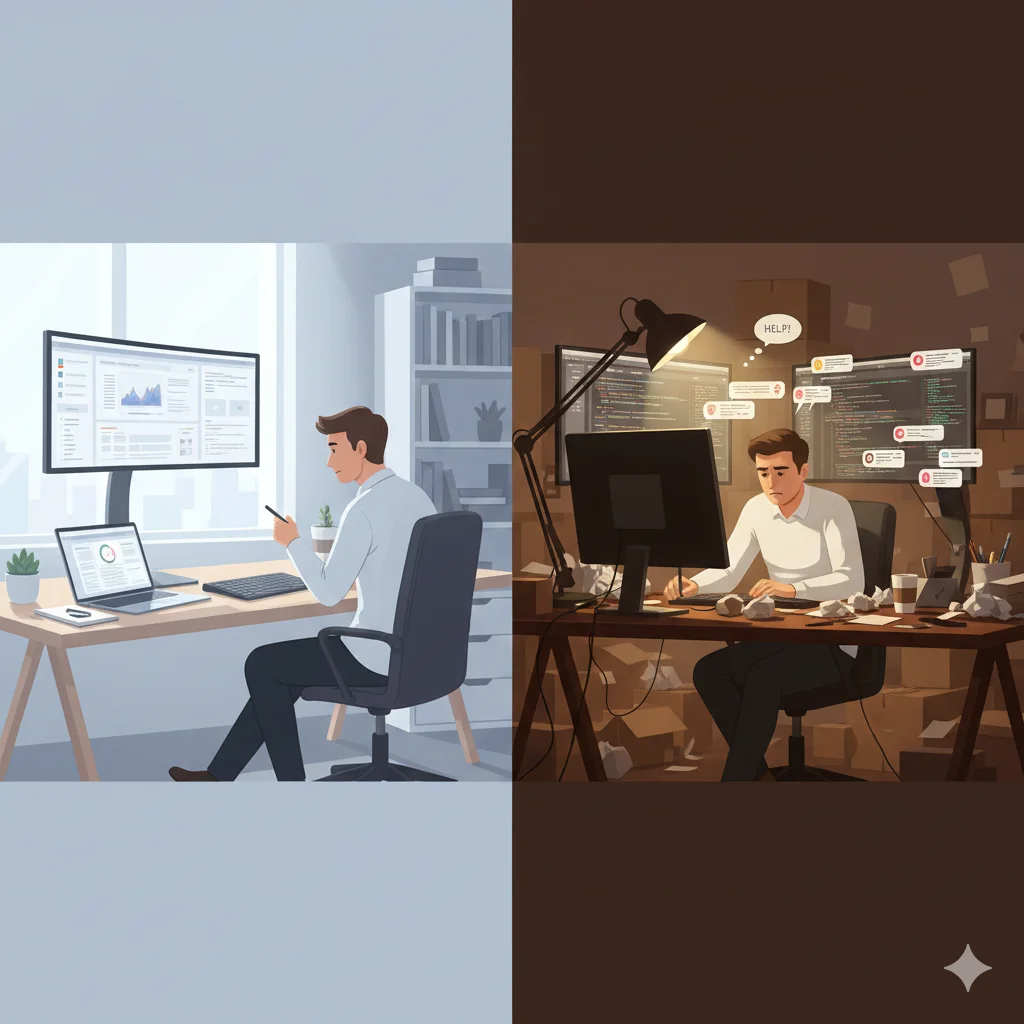

You know that moment when you’re three hours deep into a dataset, your coffee’s gone cold, and you suddenly realize you’ve built the most elegant model of your career without even noticing time pass? That’s flow. And then there’s the other 90% of your week—context-switching between Slack messages, fighting with pandas DataFrames, and wondering why your scatter plot looks like a crime scene.

I’ve spent the last five years oscillating between these two states, and I’m convinced that the difference between good data work and transcendent data work isn’t about knowing more algorithms or mastering another visualization library. It’s about engineering the conditions for flow—that psychological state where challenge meets skill, and productivity becomes effortless.

Let me show you how to get there more often.

The Science of Flow (Or: Why Your Brain Loves Clean Data)

Mihaly Csikszentmihalyi didn’t coin the term “flow” thinking about data scientists, but he might as well have. Flow occurs when you’re completely absorbed in an activity that’s challenging but achievable, with clear goals and immediate feedback (Cowley, 2020). Sound familiar? That’s literally what happens when you’re exploring a well-structured dataset.

The problem is that our work rarely stays well-structured for long.

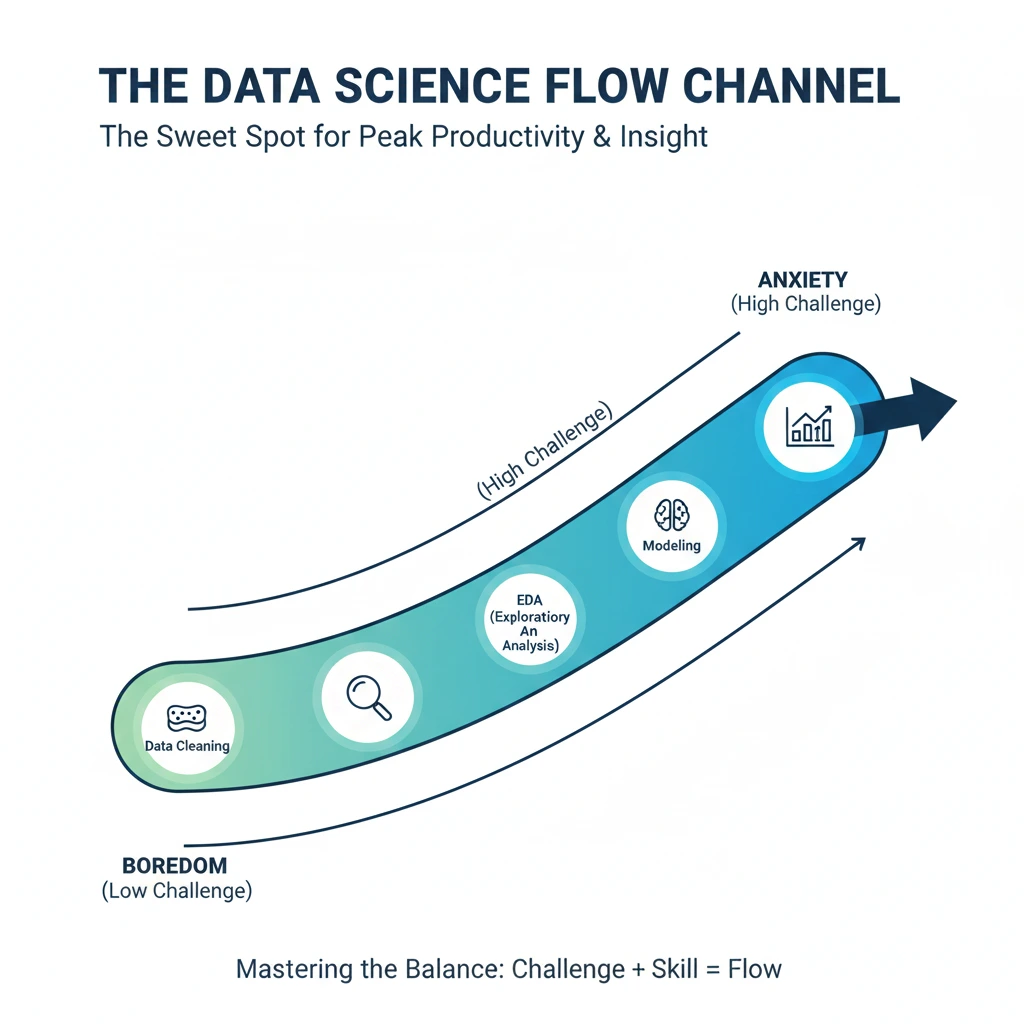

Research by Schüler (2019) shows that flow states require a delicate balance: tasks must be difficult enough to engage your full attention but not so difficult that they trigger anxiety. For data professionals, this sweet spot shifts constantly—cleaning messy data might be tedious (low challenge, low engagement), while building a deep learning model from scratch might be overwhelming (high challenge, high anxiety).
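To make that balance concrete, here is a toy sketch of the classic three-channel picture of flow (boredom, flow, anxiety) in Python. The `flow_channel` function, the 1-10 self-rating scale, and the 1.5-point tolerance are hypothetical choices for illustration. They are not taken from Schüler’s work or from any validated instrument.

```python
def flow_channel(challenge: float, skill: float, tolerance: float = 1.5) -> str:
    """Classify a task by comparing perceived challenge with perceived skill.

    Both inputs are self-ratings on a hypothetical 1-10 scale; `tolerance`
    is an arbitrary width for the "flow channel" where the two roughly match.
    """
    gap = challenge - skill
    if gap > tolerance:
        return "anxiety"  # the task outstrips current skill
    if gap < -tolerance:
        return "boredom"  # skill far exceeds what the task demands
    return "flow"  # challenge and skill are roughly balanced


# Illustrative self-ratings for the examples in the text
print(flow_channel(challenge=2, skill=7))  # routine cleaning: boredom
print(flow_channel(challenge=9, skill=5))  # deep learning from scratch: anxiety
print(flow_channel(challenge=6, skill=6))  # well-matched exploration: flow
```

The numbers matter less than the habit: noticing which channel a task sits in tells you whether to raise or lower the challenge.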

The neuroscience offers a plausible explanation, though it’s still being worked out. One leading hypothesis (“transient hypofrontality”) holds that during flow, activity in parts of the prefrontal cortex associated with self-monitoring and time awareness temporarily drops. That may be why you lose track of time. Dopamine and norepinephrine are also thought to play a role, sharpening focus and pattern recognition. You know, the exact skills you need to spot that subtle correlation buried in your data.

“My therapist asked why I was late to our session. I told her I was in flow state cleaning a dataset. She asked if I needed a different kind of help.” — Every data scientist, probably

The Three Pillars of Flow for Data Work

1. The Exploratory Mindset: Curiosity Over Completion

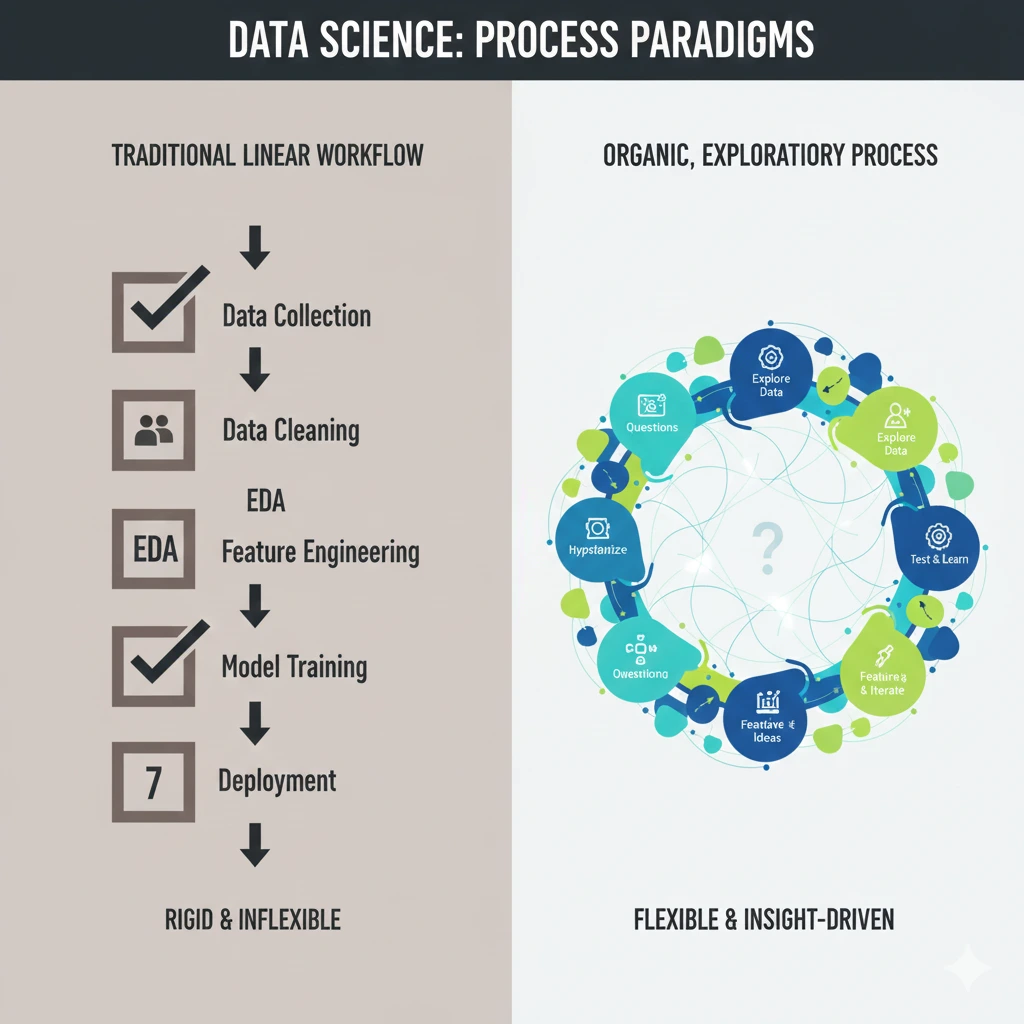

Here’s where most data scientists go wrong: we treat every task like a race to the finish line. Load data, clean data, model data, deploy model, repeat. This production-line mentality is the antithesis of flow.

Instead, I’ve started treating every dataset like a mystery novel I’m genuinely excited to read. Not “I need to predict customer churn by Friday,” but “I wonder what story this customer behavior data is trying to tell me?”

This shift is subtle but transformative. An exploratory mindset means:

- Starting with questions, not solutions. Before I touch any code, I spend 10 minutes writing down what I’m genuinely curious about. Not what my stakeholder wants to know—what I want to know. Those questions become my north star.

- Permitting myself to wander. If I notice something interesting in the data that’s tangential to my main goal, I follow it. Not for hours, but for 15 minutes. Often, these detours surface insights that reshape the entire analysis.

- Celebrating unexpected findings. When your model performs worse than expected, that’s not failure—that’s the data teaching you something. Flow happens when you’re learning, not when you’re executing a predetermined script.

I borrowed this technique from ethnographic research methods, where the goal isn’t to confirm hypotheses but to understand phenomena. Turns out, this mindset is perfectly suited for exploratory data analysis.

“I don’t always follow best practices in EDA, but when I do, it’s because I got distracted by something shiny in the correlation matrix and accidentally discovered actual insights.” — Data scientist confession

2. The Question-First Ritual: Engineering Your Entry Into Flow

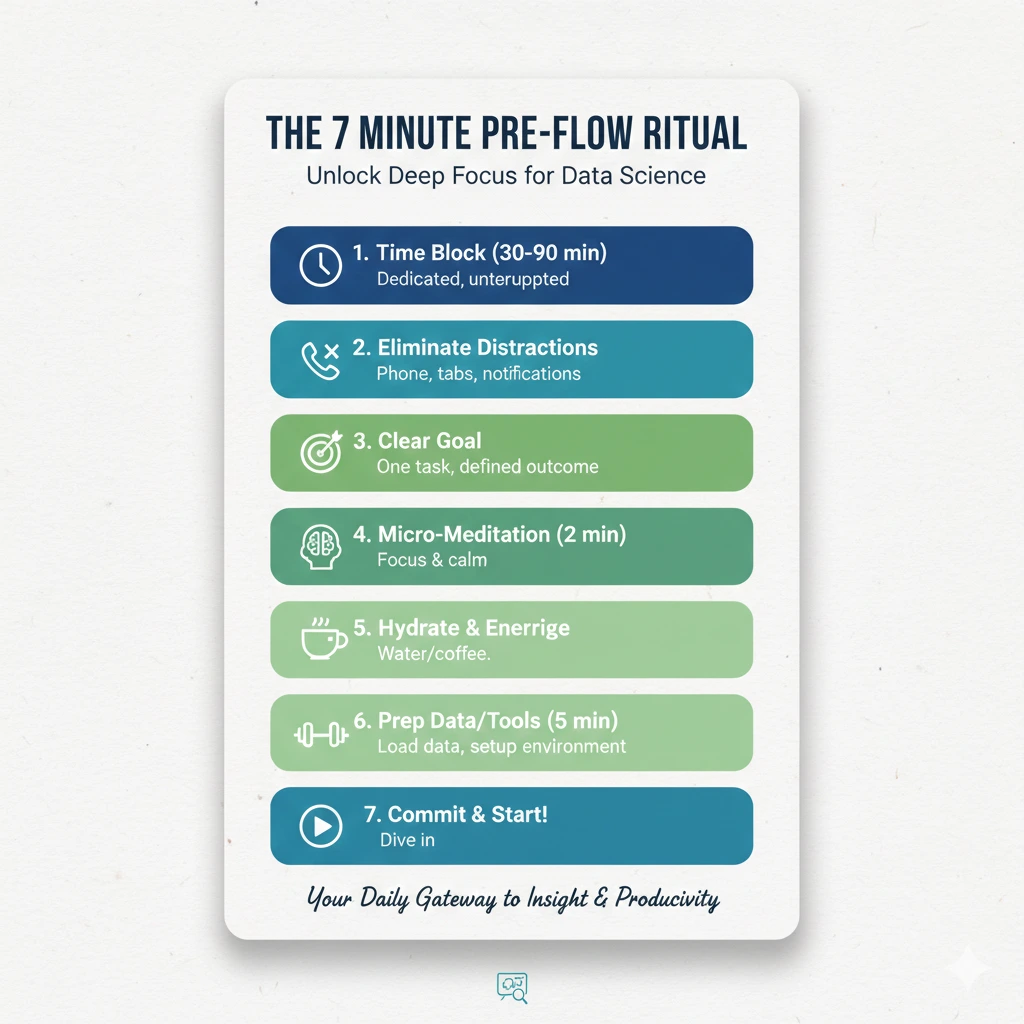

Flow doesn’t happen accidentally. You need a ritual—a consistent way to transition from the chaos of your day into deep, focused work.

My ritual takes exactly 7 minutes, and it’s become sacred:

Minutes 1-2: Environmental Reset

- Close every browser tab except documentation

- Put phone in another room (not just face-down—another room)

- Set Slack to Do Not Disturb with a custom status: “Deep in data, back at [specific time]”

Minutes 3-5: The Question Session

I open a blank document and write three questions:

- What do I hope to discover in the next two hours?

- What would surprise me in this data?

- What’s the one insight that would change how stakeholders think about this problem?

These aren’t rhetorical. I actually try to answer them with my current knowledge, which usually reveals how little I know—perfect motivation to dive in.

Minutes 6-7: The First Action

I write and execute the simplest possible code: load the data and look at the first five rows. That’s it. No analysis yet. Just looking.
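In pandas, that first action might look like the sketch below. It assumes the data lives in a CSV file; the column names and the inline sample rows are made up so the snippet runs on its own.

```python
import io

import pandas as pd

# Stand-in for a real file: swap the StringIO for a path such as
# "customer_behavior.csv" (a hypothetical file name).
raw_csv = io.StringIO(
    "customer_id,signup_date,plan,monthly_spend\n"
    "101,2023-01-04,basic,19.99\n"
    "102,2023-01-09,pro,49.00\n"
    "103,2023-02-14,basic,19.99\n"
    "104,2023-02-20,team,120.00\n"
    "105,2023-03-02,pro,49.00\n"
    "106,2023-03-15,basic,\n"
)

df = pd.read_csv(raw_csv)

# The whole first action: look at the first five rows. No analysis yet.
first_look = df.head()  # head() returns 5 rows by default
print(first_look)
```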

This might sound trivial, but it’s psychologically crucial. You’ve created momentum without triggering the anxiety of “I need to build something impressive right now.” You’ve also primed your brain with specific questions, so it’s already pattern-matching in the background.

For more on creating mindful work rituals, check out this guide: The Engineer’s Guide to Mindful Deep Work

“My question-first ritual has saved me from building at least a dozen models that answered questions nobody asked. Including myself.”

3. Mindful Dashboard Gazing: The Art of Contemplative Visualization

Here’s a practice that completely changed my relationship with data visualization: mindful dashboard gazing.

After I create a visualization—whether it’s a simple histogram or a complex dashboard—I don’t immediately move to interpretation. Instead, I spend 60 seconds just looking at it. No notes, no judgments, just observation.

What colors dominate? What patterns emerge before I even try to analyze? What draws my eye first? Where does my gaze naturally rest?

In my experience, this practice (borrowed from contemplative traditions and applied to data) creates a meditative state that’s surprisingly conducive to insight. Your pattern recognition gets a chance to engage before your analytical mind starts overlaying assumptions.

Here’s how to practice mindful dashboard gazing:

Step 1: Create Space

After generating a visualization, take three deep breaths. Sounds woo-woo, I know. But those breaths create a psychological pause between “making” and “analyzing.”

Step 2: Observe Without Labeling

Look at the visualization for 60 seconds. Notice shapes, colors, distributions, outliers, but don’t name them or interpret them yet. Just see them.

Step 3: Notice Your Reactions

What emotions arise? Curiosity? Confusion? Satisfaction? These emotional reactions often point to insights your conscious mind hasn’t articulated yet.

Step 4: Ask One Question

Based on what you observed, formulate one genuinely curious question. Not “Why is conversion rate down?”, which is leading. Instead: “What story is this gap in the distribution trying to tell?”

I’ve found that insights I discover through mindful gazing are qualitatively different from insights I force through analysis. They’re more holistic, more surprising, and more actionable. Plus, this practice has made me better at communicating findings—when you truly see your visualizations first, you can help others see them too.

For techniques on creating more mindful visualizations, explore: Mindful Data Storytelling

“I used to think staring at dashboards without doing anything made me look lazy. Turns out, it’s just high-level analysis that my manager doesn’t understand.”

The Practical Flow Toolkit: From Theory to Terminal

Enough philosophy. Let’s get tactical. Here are the specific practices I use to maintain flow during each phase of data work:

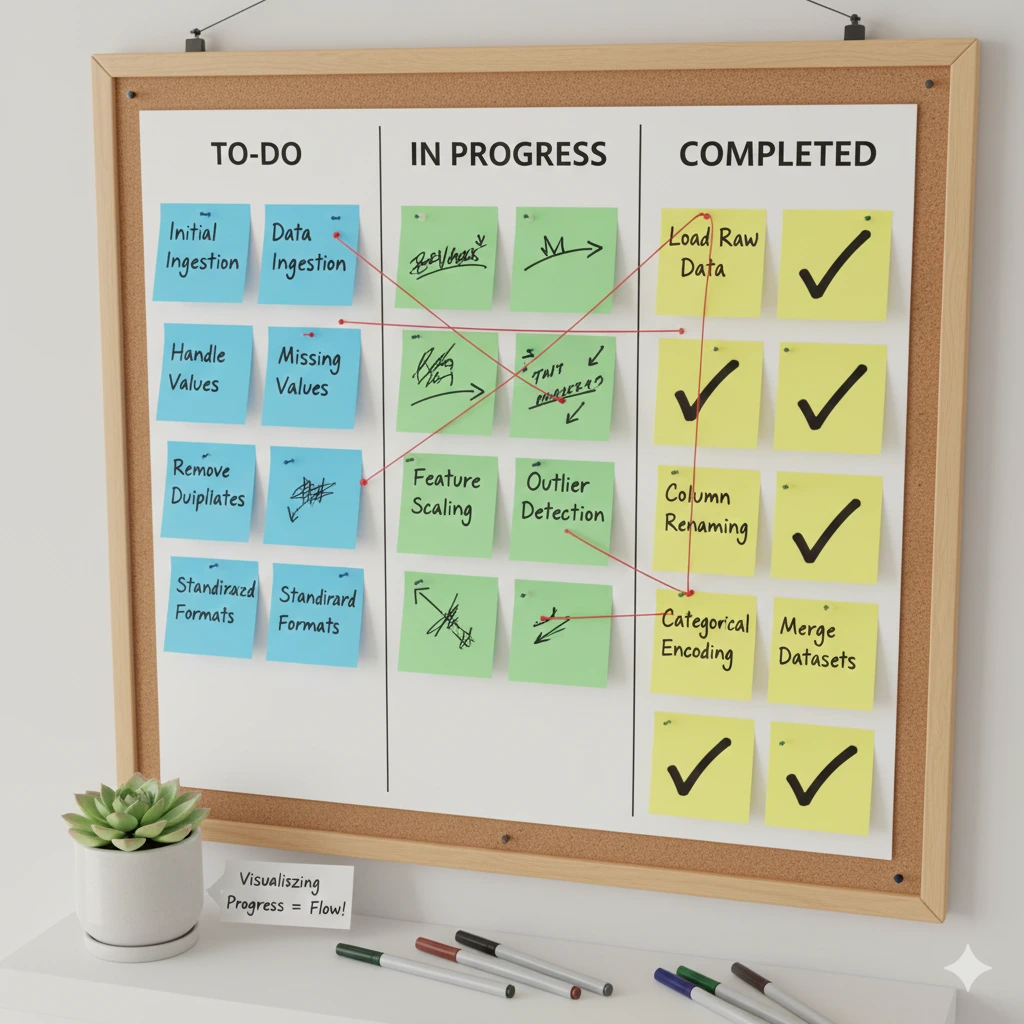

During Data Cleaning: The Meditation of Monotony

Data cleaning is where flow goes to die, right? Wrong. It’s where flow can be most sustainable if you frame it correctly.

The 20-Minute Sprint Method

- Set a timer for 20 minutes

- Choose ONE data quality issue (missing values, duplicates, inconsistent formatting)

- Solve only that problem—resist the urge to fix everything

- After 20 minutes, take a 5-minute walk away from your screen

- Repeat

This creates multiple mini-flow states instead of one exhausting marathon. Each sprint has a clear goal, immediate feedback (watch your error count drop), and just enough challenge to stay engaged.
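Here’s a minimal sketch of one such sprint in pandas, assuming the chosen issue is exact duplicate rows. The toy data and the `count_duplicate_rows` helper are hypothetical; the point is that the sprint ends with a single before/after number you can watch drop.

```python
import pandas as pd


def count_duplicate_rows(frame: pd.DataFrame) -> int:
    """Number of rows that exactly repeat an earlier row."""
    return int(frame.duplicated().sum())


# Hypothetical toy data containing two exact duplicate rows
df = pd.DataFrame(
    {
        "customer_id": [101, 102, 101, 103, 102],
        "plan": ["basic", "pro", "basic", "team", "pro"],
    }
)

before = count_duplicate_rows(df)

# This sprint's ONE issue: exact duplicates. Missing values and formatting wait.
df_clean = df.drop_duplicates().reset_index(drop=True)

after = count_duplicate_rows(df_clean)
print(f"duplicate rows: {before} -> {after}")
```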

The Cleaning Checklist as Meditation

Create a literal checklist of common data quality issues. As you work through it, check each box mindfully, acknowledging what you’ve completed before moving forward. This creates psychological closure that prevents the “am I done yet?” anxiety that breaks flow.
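If you’d rather keep the checklist next to your code, one possible sketch is a dictionary of named checks that you tick off in order. The three checks below are examples rather than a complete list, and `CHECKLIST` and `run_checklist` are hypothetical names.

```python
import pandas as pd

# A hypothetical starter checklist; each check returns True when the frame passes.
CHECKLIST = {
    "no missing values": lambda f: not f.isna().any().any(),
    "no exact duplicate rows": lambda f: not f.duplicated().any(),
    "column names are lowercase with no spaces": lambda f: all(
        c == c.lower() and " " not in c for c in f.columns
    ),
}


def run_checklist(frame: pd.DataFrame) -> dict:
    """Work through the checklist in order, ticking each box that passes."""
    results = {}
    for name, check in CHECKLIST.items():
        passed = bool(check(frame))
        results[name] = passed
        print(f"[{'x' if passed else ' '}] {name}")
    return results


messy = pd.DataFrame(
    {"customer_id": [1, 2, 2, 3], "Plan Type": ["basic", "pro", "pro", None]}
)
tidy = pd.DataFrame({"customer_id": [1, 2, 3], "plan_type": ["basic", "pro", "team"]})

messy_results = run_checklist(messy)  # every box stays unticked
tidy_results = run_checklist(tidy)  # every box gets ticked
```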

During Modeling: The Balance of Experimentation and Structure

Modeling can trigger anxiety because the possibilities are infinite. Should you try XGBoost? Maybe a neural network? Wait, what about feature engineering first?

The Three-Model Rule

I only allow myself to seriously pursue three modeling approaches per session. This constraint creates focus without eliminating experimentation. I pick:

- One baseline (simple and fast)

- One standard approach (proven for this problem type)

- One experimental idea (the thing I’m genuinely curious about)

This trinity balances the need for rigor with the joy of exploration—both necessary for flow.

Version Control as Mindfulness Practice

Every time I commit code, I write a commit message that explains not just what I changed but why I thought it was worth trying. This forces me to articulate my thinking, which keeps me present rather than randomly trying hyperparameters.

For more on mindful ML practices: Building Models with Intention

During Visualization: Creating Feedback Loops

Visualization work can be incredibly flow-inducing because feedback is instant—change a parameter, see the result. But it can also become obsessive tweaking.

The 5-3-1 Visualization Method

- Create 5 rough versions of your visualization quickly (10 minutes total)

- Pick the 3 most promising and refine them (30 minutes)

- Choose 1 winner and perfect it (20 minutes)

This method prevents perfectionism paralysis while maintaining quality. Each stage has clear goals and completion criteria.
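The first stage (five rough drafts) can be a single matplotlib figure. This sketch uses synthetic right-skewed data and five chart types chosen for illustration; nothing is styled, because polish belongs to the later stages.

```python
import matplotlib

matplotlib.use("Agg")  # off-screen rendering; omit this line in a notebook
import matplotlib.pyplot as plt
import numpy as np

# Synthetic, right-skewed "monthly spend" data (illustrative only)
rng = np.random.default_rng(0)
spend = rng.lognormal(mean=3.0, sigma=0.6, size=500)

# Stage 1 of 5-3-1: five rough drafts, no styling, no polishing
fig, axes = plt.subplots(1, 5, figsize=(20, 4))

axes[0].hist(spend, bins=30)
axes[0].set_title("histogram")

axes[1].boxplot(spend)
axes[1].set_title("box plot")

axes[2].violinplot(spend)
axes[2].set_title("violin plot")

# Empirical CDF: sorted values against cumulative proportion
sorted_spend = np.sort(spend)
axes[3].plot(sorted_spend, np.arange(1, sorted_spend.size + 1) / sorted_spend.size)
axes[3].set_title("ECDF")

axes[4].hist(np.log(spend), bins=30)
axes[4].set_title("histogram of log(spend)")

fig.tight_layout()
```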

“I’ve been in flow state for four hours perfecting a visualization that nobody will look at for more than 30 seconds. I regret nothing.”

Obstacles to Flow (And How to Remove Them)

Let’s be honest about what actually breaks flow for data scientists:

The Meeting Interrupt

You can’t build deep focus if your calendar looks like Swiss cheese. I’ve started blocking 4-hour “Deep Data Work” slots twice a week that are non-negotiable. I communicate this to my team: “If the building is on fire, Slack me. Otherwise, it can wait.”

The Perfectionism Trap

Flow requires that you’re good enough to succeed but not so good that the work is boring. Perfectionism makes everything boring because you’re never discovering—you’re just executing standards you’ve already set.

Solution: Give yourself explicit permission to create “exploratory code” that’s messy. I have a directory called /playground where code quality doesn’t matter. If I discover something valuable, then I refactor.

The Context-Switching Curse

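A widely cited finding from Gloria Mark’s research on workplace interruptions is that it takes an average of about 23 minutes to fully return to a task after an interruption. Three interruptions can cost you more than an hour of refocusing, and your afternoon is gone.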

Solution: The “Batch and Boundary” system:

- Batch all communication checking into three slots: 9am, 1pm, 4pm

- Set boundaries: “I respond to non-urgent messages twice daily”

- Use auto-responders during deep work blocks

The Imposter Syndrome Spiral

Nothing kills flow faster than the voice saying “You don’t know what you’re doing.” This is especially acute in data science where there’s always someone who knows more than you.

Solution: Reframe the internal dialogue. Not “I should already know this” but “I’m discovering this now, which makes me one of the few people who will know it moving forward.”

The Recovery Phase: Post-Flow Integration

Here’s what nobody tells you about flow: you can’t stay in it forever, and trying to will burn you out.

After a deep flow session, I practice what I call “integration time”:

Immediate (0-15 minutes after flow):

- Write down the three most important things I discovered

- Note what questions emerged that I didn’t have time to explore

- Save all work and close the project completely

Same Day (within 3 hours):

- Share findings with one person, even if it’s just a colleague or a Slack message to myself

- This verbal or written articulation solidifies learning and provides social feedback

Next Day:

- Spend 10 minutes reviewing yesterday’s discoveries with fresh eyes

- Often, connections appear that weren’t visible in the moment

This integration phase prevents the “what did I even do yesterday?” phenomenon and creates continuity between flow sessions.

For more on sustainable deep work practices: Avoiding Burnout in Technical Work

Building a Flow-Friendly Environment

Your physical and digital environment either invites flow or repels it. After years of experimentation, here’s my optimal setup:

Physical Space:

- External monitor positioned at eye level (neck strain breaks flow)

- Noise-canceling headphones with instrumental music or binaural beats

- Natural light when possible (affects alertness and mood)

- Temperature slightly cool (some studies suggest roughly 68-70°F, or 20-21°C, works well for cognitive work)

Digital Space:

- IDE theme that’s easy on the eyes (I use a low-contrast dark theme)

- Second monitor for documentation only (reduces context switching)

- Browser extensions that block distracting sites during deep work hours

- A dedicated virtual environment for each project (reduces cognitive load of dependency management)

Temporal Space:

- Schedule deep work during your biological peak (I’m a morning person; afternoons are for meetings)

- Protect the first 90 minutes of your workday; for many people (me included), this is when flow capacity is highest

- Use ultradian rhythms: 90-120 minute work blocks with 15-20 minute breaks
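If you want to see how those rhythms fit into an actual morning, a small helper can lay them out. `plan_blocks` is a hypothetical function, and it defaults to the low end of the ranges above (90-minute blocks, 15-minute breaks); the date is arbitrary.

```python
from datetime import datetime, timedelta


def plan_blocks(start, end, work_minutes=90, break_minutes=15):
    """Fit as many full work blocks (each followed by a break) as the window allows."""
    blocks = []
    cursor = start
    work = timedelta(minutes=work_minutes)
    rest = timedelta(minutes=break_minutes)
    while cursor + work <= end:  # only schedule blocks that fit completely
        blocks.append((cursor, cursor + work))
        cursor += work + rest
    return blocks


morning = plan_blocks(datetime(2024, 5, 6, 8, 0), datetime(2024, 5, 6, 12, 0))
for block_start, block_end in morning:
    print(f"{block_start:%H:%M}-{block_end:%H:%M} deep work")
```

For an 8:00-12:00 morning this yields two full blocks (8:00-9:30 and 9:45-11:15); the time left after the second break is too short for a third.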

Measuring Your Flow Practice

You can’t improve what you don’t measure. I track my flow practice with a simple weekly reflection:

- How many deep work blocks (2+ hours of uninterrupted focus) did I have?

- Which sessions felt most flow-like? What conditions made that possible?

- What broke my flow this week, and what can I control next week?

- What’s one discovery I made during flow that I’m genuinely excited about?

I don’t aim for perfection—just incremental improvement. Some weeks I get zero deep work blocks, and that’s okay. The awareness itself starts to shift behavior.
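If you prefer to log sessions rather than rely on memory, here’s one possible sketch of the first two reflection questions as code. The `Session` record and `weekly_summary` function are hypothetical; the two-hour, zero-interruption threshold mirrors the definition of a deep work block above, and “felt like flow” is simply a yes/no you note after each session.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Session:
    start: datetime
    end: datetime
    interruptions: int
    felt_like_flow: bool  # a subjective yes/no noted right after the session


def weekly_summary(sessions, min_block=timedelta(hours=2)):
    """Count deep work blocks (2+ hours, zero interruptions) and flow-like sessions."""
    deep_blocks = [
        s for s in sessions if s.interruptions == 0 and s.end - s.start >= min_block
    ]
    return {
        "deep_work_blocks": len(deep_blocks),
        "flow_like_sessions": sum(s.felt_like_flow for s in sessions),
    }


# A hypothetical week's log
week = [
    Session(datetime(2024, 5, 6, 8, 0), datetime(2024, 5, 6, 10, 30), 0, True),
    Session(datetime(2024, 5, 7, 13, 0), datetime(2024, 5, 7, 15, 0), 2, False),
    Session(datetime(2024, 5, 9, 8, 0), datetime(2024, 5, 9, 9, 30), 0, True),
]
summary = weekly_summary(week)
print(summary)
```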

The Compound Effect: Flow as Career Accelerator

Here’s the thing about flow: it doesn’t just make you more productive. It makes you better.

The models you build in flow state are more creative. The visualizations you create are more insightful. The patterns you spot are more subtle. Over months and years, this compounds dramatically.

I’ve reviewed hundreds of data science portfolios, and I can often tell which projects were built in flow. They have a coherence and depth that check-the-box work lacks. They show real exploration, not just execution.

Cowley (2020) found that individuals who regularly experience flow at work report higher job satisfaction, faster skill development, and greater creative output. For data scientists, where creativity and technical skill must coexist, this is our competitive advantage.

Your First Flow Experiment

Reading about flow is not the same as experiencing it. So here’s your homework (but make it fun):

Tomorrow, try this:

- Pick one dataset you’ve been meaning to explore

- Block 90 minutes of uninterrupted time

- Use the 7-minute question-first ritual

- Commit to staying curious rather than rushing to conclusions

- Practice mindful dashboard gazing on at least one visualization

- After the session, write down how it felt different from your usual work

That’s it. One experiment. See what happens.

Conclusion: The Paradox of Trying Less

The central paradox of flow is that you access it by trying less, not more. You don’t force flow; you create the conditions for it to emerge naturally.

For data scientists, this means:

- Approaching data with curiosity rather than agenda

- Establishing rituals that ease you into deep work

- Practicing presence through mindful observation

- Designing your environment to support sustained focus

- Accepting that not every work session will achieve flow, and that’s fine

The most successful data scientists I know aren’t the ones who grind the hardest. They’re the ones who’ve learned to regularly access states of effortless engagement where their best work emerges naturally.

Flow isn’t a luxury or a nice-to-have. In a field that demands both technical precision and creative insight, it’s the state where those two forces align. It’s where good data work becomes great data work.

So stop trying so hard. Start creating space. Ask better questions. Gaze mindfully at your dashboards. Trust that when you remove the obstacles, flow will find you.

Your best analysis is waiting on the other side of that question you’re genuinely curious about.

“I used to measure my productivity in models shipped. Now I measure it in moments of genuine discovery. Turns out, the latter predicts the former pretty well.”

References:

Cowley, B. (2020). The flow experiences framework: Refining the conceptual structure of flow in games. International Journal of Human-Computer Studies, 147, 102577.

Schüler, J. (2019). The achievement flow motive as an element of the autotelic personality: Predicting flow experience in achievement contexts. European Journal of Personality, 21(5), 555-577.