Zero-Shot, Few-Shot, and Chain-of-Thought Prompting Overview

Introduction

Prompt programming introduces you to several core AI concepts, including few-shot learning, zero-shot learning, and chain-of-thought prompting. These techniques shape how models use examples, generalize to new tasks, and work through complex reasoning problems. In this section, we’ll break these terms down into plain language, using real-life examples to make prompt programming easier to understand.

Introduction to Few-Shot and Zero-Shot Learning

Few-shot and zero-shot learning are key techniques in AI that let models take on new tasks with little or no task-specific data. Before exploring the specifics, let’s understand what these terms mean in the context of prompt programming.

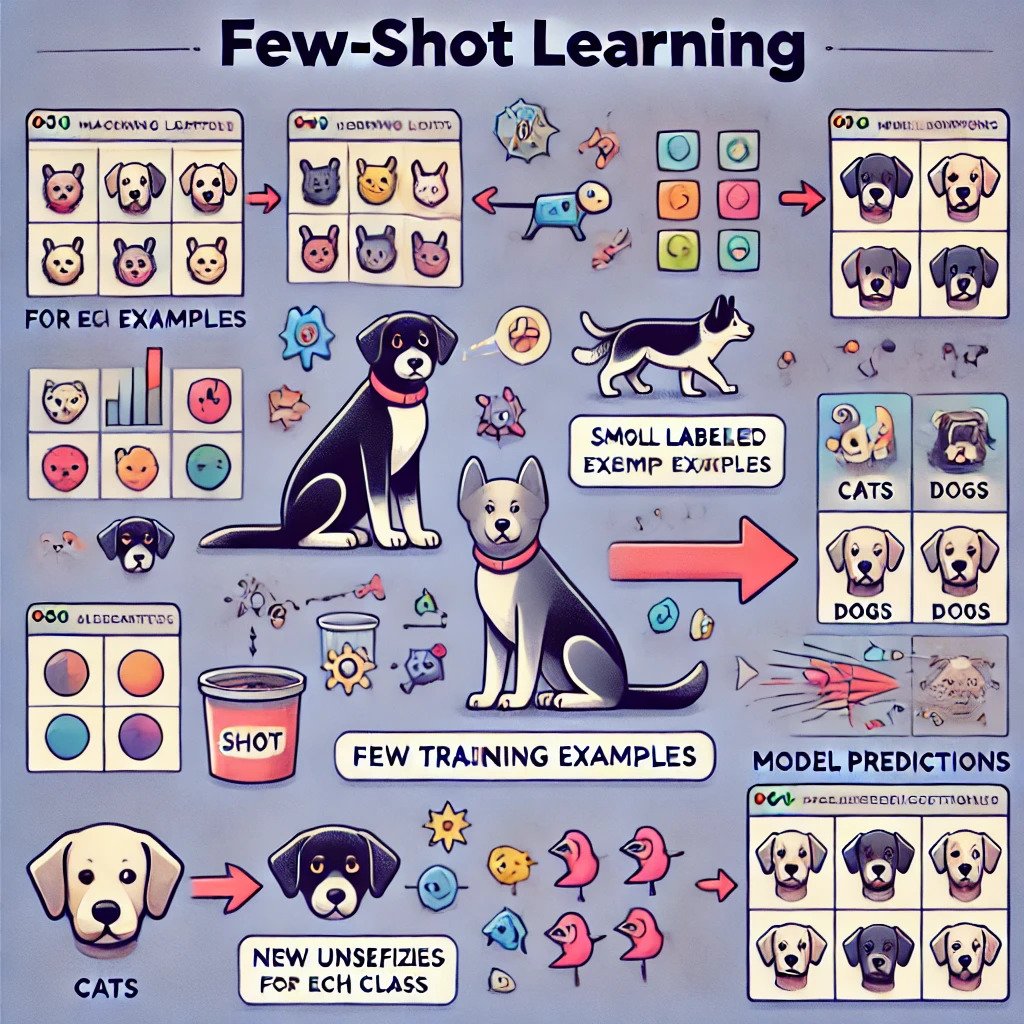

What is Few-Shot Learning?

Few-shot learning (FSL) is a method in which a machine learning model learns to make predictions from only a few examples. Traditional models need large amounts of data to learn patterns, but few-shot learning works with very little. Imagine teaching a child to identify a zebra: after seeing three or four pictures, the child can recognize a zebra the next time one appears. Few-shot learning works in much the same way. The model takes a small number of labelled examples and generalizes from them. In prompt programming, those examples are usually written directly into the prompt.
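In prompt programming, the "few examples" are typically placed in the prompt itself, ahead of the new input. The sketch below shows one way to assemble such a prompt. The function name `build_few_shot_prompt`, the sentiment task, and the sample reviews are all hypothetical and chosen only for illustration; the resulting string could be sent to any text-generation model.

```python
def build_few_shot_prompt(examples, query,
                          instruction="Classify the sentiment of each review."):
    """Assemble a prompt that shows labelled examples before the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final block leaves the label blank for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Three made-up labelled examples (the "shots").
examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("Setup took two minutes and just worked.", "positive"),
]

prompt = build_few_shot_prompt(examples, "The speaker crackles at high volume.")
print(prompt)
```

Ending the prompt with an empty `Sentiment:` line is a common design choice: it signals to the model that the next thing to produce is a label in the same format as the examples.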

Example: Few-Shot Learning in Action

Suppose you want an AI model to recognize handwritten digits. Instead of feeding it thousands of examples, you give it only five images per digit. The model uses these few examples to classify new, unseen handwritten digits. This makes few-shot learning especially useful in situations where large datasets are difficult to obtain.
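One simple way to classify from a handful of examples is a nearest-centroid rule: average the few examples of each class, then assign a new input to the closest average. The sketch below is an illustration under strong assumptions, not how any particular digit recognizer works. Each "image" is reduced to two made-up features (number of closed loops, fraction of ink in the top half), and only three digits are included to keep it short.

```python
import math


def centroid(vectors):
    """Average a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def fit_centroids(support_set):
    """Compute one centroid per label from a few labelled examples."""
    return {label: centroid(vectors) for label, vectors in support_set.items()}


def classify(centroids, x):
    """Return the label whose centroid is closest to x (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


# Five hypothetical examples per digit: [closed loops, ink fraction in top half].
support_set = {
    "0": [[1.0, 0.50], [1.0, 0.52], [1.0, 0.48], [1.0, 0.51], [1.0, 0.49]],
    "1": [[0.0, 0.50], [0.0, 0.55], [0.0, 0.47], [0.0, 0.52], [0.0, 0.50]],
    "8": [[2.0, 0.50], [2.0, 0.48], [2.0, 0.53], [2.0, 0.49], [2.0, 0.51]],
}

centroids = fit_centroids(support_set)
print(classify(centroids, [2.0, 0.52]))  # prints 8
print(classify(centroids, [0.1, 0.50]))  # prints 1
```

Real systems would use learned features rather than hand-picked ones, but the principle is the same: a small labelled set is enough when the features already separate the classes well.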

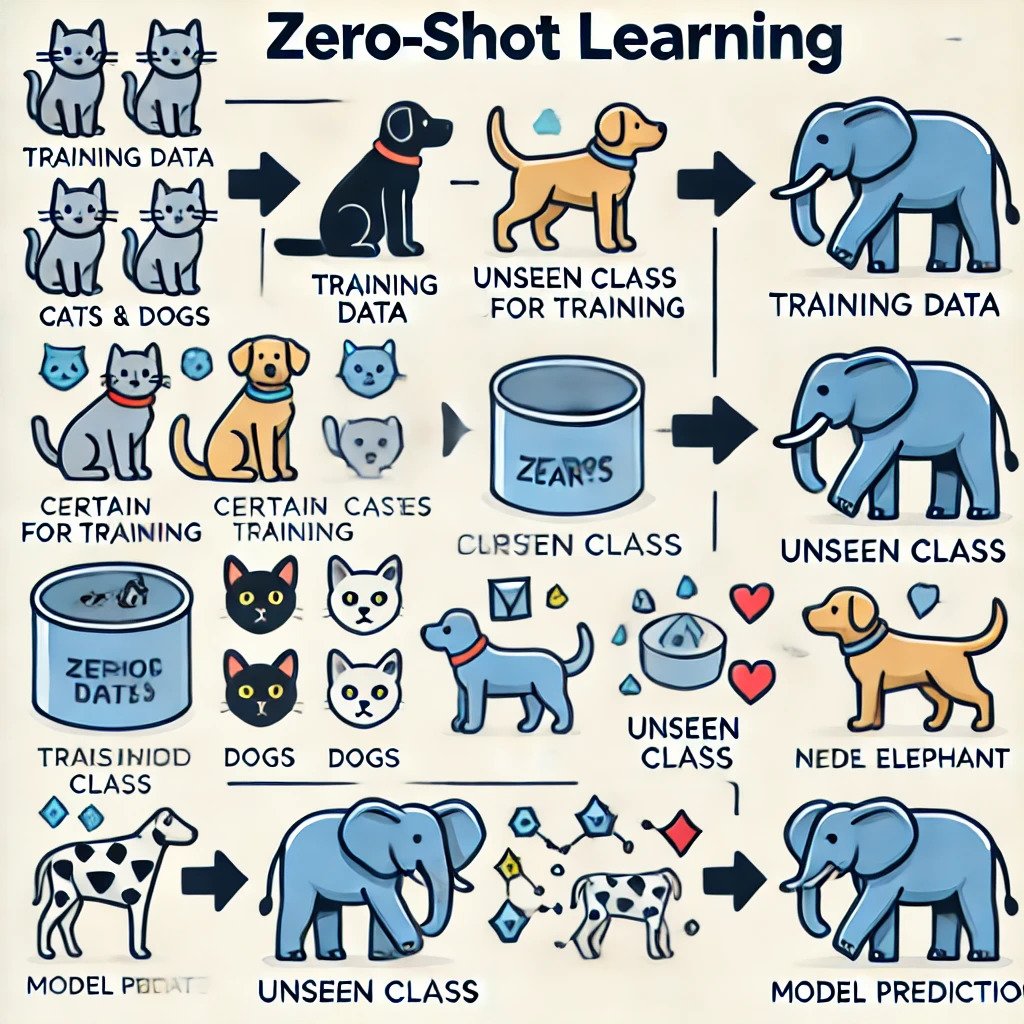

What is Zero-Shot Learning?

Zero-shot learning takes things a step further: it lets a model classify inputs or handle tasks for which it has seen no examples. Instead of learning directly from labelled examples, the model relies on semantic information or context, such as a description of the new class or task.

Example: Zero-Shot Learning in Action

Let’s say you’ve trained an AI model to recognize common animals like dogs and cats. Now you introduce a new animal, a panda, without giving the model any panda images. Zero-shot learning lets the model make connections based on the features it knows from other animals (e.g., it’s furry, has four legs) and label the panda, even though it was never trained on panda images.
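A minimal sketch of this idea is attribute-based matching: each class is described by a set of attributes, and an observation is assigned to the class whose description overlaps with it most. The attribute sets below are invented for illustration, and Jaccard similarity is just one reasonable choice of overlap measure. Note the assumption that the model is given a description of the panda class; what it never sees is a labelled panda example.

```python
def jaccard(a, b):
    """Overlap between two sets: size of intersection over size of union."""
    return len(a & b) / len(a | b)


# Hypothetical class descriptions. No labelled examples are used,
# only these attribute sets.
class_descriptions = {
    "dog": {"furry", "four legs", "barks", "domestic"},
    "cat": {"furry", "four legs", "meows", "domestic"},
    "panda": {"furry", "four legs", "black and white", "eats bamboo"},
}


def zero_shot_classify(observed, descriptions):
    """Pick the class whose description best matches the observed attributes."""
    return max(descriptions, key=lambda name: jaccard(observed, descriptions[name]))


observed = {"furry", "four legs", "black and white"}
label = zero_shot_classify(observed, class_descriptions)
print(label)  # prints panda
```

The shared attributes ("furry", "four legs") are what let knowledge about dogs and cats carry over; the distinguishing attribute ("black and white") is what separates the new class from the known ones.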

Difference between Few-Shot and Zero-Shot Learning

| Aspect | Few-Shot Learning | Zero-Shot Learning |
| --- | --- | --- |
| Training Data | Needs a small set of labelled examples | Needs no labelled examples for the target task |
| Prior Knowledge | Leverages limited task-specific data | Relies heavily on pre-existing knowledge or embeddings |
| Flexibility | Adapts to new tasks with fewer examples | Can perform completely new tasks without examples |
| Performance | Generally more accurate for specific tasks | May be less accurate but more versatile |
| Application Scenarios | Useful when a few labelled examples are available | Ideal for tasks where no labelled data is available |

Implementing Chain-of-Thought Prompting

Chain-of-thought prompting is a technique that prompts AI models to lay out their reasoning step by step. Rather than answering a question immediately, the model writes out a “thought process” that leads to the final answer. This mirrors how humans solve problems: by reasoning through each step logically.

What is Chain-of-Thought Prompting?

Chain-of-thought prompting is a prompt programming technique in which the AI is guided to break a task into smaller, logical steps. Instead of producing an immediate answer, the model works toward the solution step by step, which tends to produce better outputs on tasks that require reasoning.

How Does Chain-of-Thought Prompting Work?

The model takes a complex query, divides it into smaller, more manageable steps, and works through each one until it gives a solution. This is helpful for tasks that need multi-step reasoning, such as math problems or logical puzzles.

Importance of Chain-of-Thought Prompting

Chain-of-thought prompting is important in prompt programming because it improves the quality of the AI’s responses. Here’s why:

- Improves Accuracy: When the AI breaks a task into smaller steps, each step is simpler, which tends to reduce errors.

- Boosts Clarity: Step-by-step thinking makes the process visible, so you can follow the logic and spot where it goes wrong.

- Handles Complex Tasks: For tasks that need deep reasoning, like solving puzzles or answering multi-part questions, chain-of-thought prompting enables the model to think through each part.

In short, it’s a way of guiding the AI to think more like a human, improving both the reasoning and the output quality.

How to Implement Chain-of-Thought Prompting in Prompt Programming

Now that we know what chain-of-thought prompting is and why it’s important, let’s look at how to implement it. Here’s a simple step-by-step approach:

- Start with a Clear Prompt: Always begin with a prompt that clearly outlines the task. The AI needs to know exactly what it’s solving.

- Guide the AI with Logical Steps: Break down the task into smaller, manageable parts. For example, if it’s a calculation, ask the AI to handle one part at a time.

- Encourage Reasoning: Use phrases like “first,” “then,” and “finally” to guide the model through the steps. This helps the AI maintain a logical flow.

- Ask for Explanations: You can prompt the AI to explain each step of its thought process. This ensures that the reasoning is clear, and you can check for any mistakes in the logic.
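The four points above can be sketched as a small prompt-building helper. The function name `build_cot_prompt`, the prompt wording, and the pen-pricing task are all hypothetical; this is one possible layout, not a prescribed format.

```python
def build_cot_prompt(task, steps):
    """Wrap a task in step-by-step guidance using ordering words."""
    if not steps:
        raise ValueError("provide at least one step")
    # Start with a clear statement of the task.
    lines = [f"Task: {task}", "", "Work through the task in order:"]
    for i, step in enumerate(steps):
        # Use "First", "Then", "Finally" to keep a logical flow.
        if i == 0:
            marker = "First"
        elif i == len(steps) - 1:
            marker = "Finally"
        else:
            marker = "Then"
        lines.append(f"{marker}, {step}")
    lines.append("")
    # Ask for an explanation so the reasoning can be checked.
    lines.append("Explain your reasoning for each step, "
                 "then state the final answer on its own line.")
    return "\n".join(lines)


cot_prompt = build_cot_prompt(
    "A shop sells pens at 3 for $2. How much do 12 pens cost?",
    [
        "work out how many groups of 3 pens are in 12.",
        "multiply the number of groups by the price per group.",
        "report the total cost.",
    ],
)
print(cot_prompt)
```

Asking for the final answer on its own line is a practical convenience: it makes the answer easy to extract while keeping the reasoning available for review.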

Example: Chain-of-Thought Prompting in Action

Let’s say you ask an AI: “If Sally has 3 apples and buys 5 more, then gives 2 to John, how many apples is she left with?” Instead of directly answering “6,” the model breaks it down:

- Sally starts with 3 apples.

- She buys 5 more, so now she has 3 + 5 = 8.

- She gives 2 to John, leaving her with 8 - 2 = 6.

This chain-of-thought process improves accuracy because the model reasons through each step, reducing errors.
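The same step-by-step arithmetic can be written as a short trace, which makes each intermediate value easy to check. This is only an illustration of the reasoning pattern, with a hypothetical helper name `trace_steps`; it is not how a language model computes its answer internally.

```python
def trace_steps(start, operations):
    """Apply each change in order and record the running total."""
    total = start
    lines = [f"Sally starts with {total} apples."]
    for description, delta in operations:
        previous = total
        total += delta
        sign = "+" if delta >= 0 else "-"
        lines.append(f"{description}: {previous} {sign} {abs(delta)} = {total}")
    return total, lines


answer, steps = trace_steps(
    3,
    [("She buys 5 more", 5), ("She gives 2 to John", -2)],
)
for line in steps:
    print(line)
print(f"Answer: {answer}")  # prints Answer: 6
```

If any single line of the trace is wrong, the mistake is visible at that line, which is the practical benefit of asking for intermediate steps.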

Solving Complex Reasoning Tasks

In this section, we’ll dive into solving more complex queries using the concepts of few-shot, zero-shot, and chain-of-thought prompting. This will challenge the AI to tackle reasoning tasks that need multiple steps and deeper understanding, showcasing the power of prompt programming techniques.

Complex Reasoning Task Example: AI Solving a Logical Puzzle

Let’s consider a famous puzzle: “There are three boxes, one labelled ‘Apples,’ one labelled ‘Oranges,’ and one labelled ‘Apples and Oranges.’ All the labels are wrong. How do you correctly label the boxes if you can only pick one fruit from one box?”

Step-by-Step Solution

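- Pick a fruit from the box labelled “Apples and Oranges.” Because every label is wrong, this box must hold only one kind of fruit.

- Suppose you pick an orange. The box then contains only oranges, so relabel it “Oranges.”

- The box marked “Apples” cannot contain only apples, and the oranges are already accounted for, so it must contain both fruits. That leaves the apples for the box marked “Oranges,” so relabel it “Apples.”

- Finally, relabel the box previously marked “Apples” as “Apples and Oranges.”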

By working through each step in turn, an AI model can reach the correct solution using a chain-of-thought process.
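The reasoning can be checked by brute force: list every way to fill the three boxes so that all labels are wrong, then keep the arrangements consistent with the fruit that was drawn. The sketch below does this with the standard library; the function names are hypothetical.

```python
from itertools import permutations

LABELS = ("Apples", "Oranges", "Apples and Oranges")


def valid_arrangements():
    """All ways to fill the boxes so that every label is wrong.

    Each arrangement maps a box's label to its true contents.
    """
    return [
        dict(zip(LABELS, contents))
        for contents in permutations(LABELS)
        if all(label != content for label, content in zip(LABELS, contents))
    ]


def deduce(picked_fruit):
    """Find the arrangement consistent with drawing picked_fruit
    from the box labelled 'Apples and Oranges'."""
    if picked_fruit not in ("apple", "orange"):
        raise ValueError("picked_fruit must be 'apple' or 'orange'")
    # That box is mislabelled, so it holds a single kind of fruit.
    wanted = "Apples" if picked_fruit == "apple" else "Oranges"
    matches = [a for a in valid_arrangements()
               if a["Apples and Oranges"] == wanted]
    if len(matches) != 1:
        raise RuntimeError("the draw did not determine a unique arrangement")
    return matches[0]


solution = deduce("orange")
for label, contents in solution.items():
    print(f"Box labelled '{label}' actually contains: {contents}")
```

Only two arrangements have every label wrong, and they differ in what the “Apples and Oranges” box holds, which is why a single draw from that box settles the whole puzzle.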

Conclusion

Few-shot and zero-shot learning, along with chain-of-thought prompting, are powerful tools in the world of AI. These techniques allow models to handle new tasks with little or no task-specific data, reason through problems step by step, and even solve puzzles they’ve never seen before. As you continue to explore prompt programming, you’ll discover how these concepts can be applied to write prompts that produce more accurate and reliable results.

Quiz Time!

What is the key difference between few-shot and zero-shot learning?

- Few-shot learning requires no examples, while zero-shot learning uses a few examples.

- Few-shot learning requires a few examples, while zero-shot learning uses none.

- Both require extensive labelled data.
